MBTI ‘aha!’ moments and ROI – where is the evidence?

Richard Stockill, R&D Consultant at OPP, and Alice King, Principal Consultant at OPP

Having both been members of OPP’s Training team many moons ago, between us we have trained literally thousands of MBTI practitioners. We both love hearing the ‘aha!’ or ‘lightbulb’ moments when individuals experience the profound insights that the MBTI framework can bring.

We know that these insights can lead to increased awareness, such as valuing the diversity of people’s views, or developing a more flexible approach to working with others. However, are anecdotal reports of great impact enough to convince us as consultants, and our community of qualified practitioners, that MBTI interventions are in fact worthwhile investments?

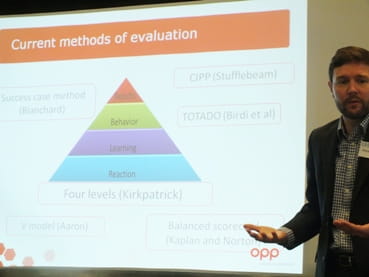

We recently ran a workshop at the BAPT conference (see photo below) on evaluating MBTI-based interventions. There was a real emphasis at BAPT in general on how important it is to evidence impact: in a cash-poor L&D environment where budgets are increasingly squeezed, being able to demonstrate that your events have a real and tangible output, in addition to a clear link to business benefits, is paramount. We also know from previous research that the act of evaluating or observing someone’s learning often actually improves the transfer of that learning to the workplace (often referred to as the ‘stickiness’ factor). A quick poll of the MBTI practitioners at our workshop told us that everyone had considered evaluating their type events, and some were doing so regularly; and yet many were unsure of what constituted an evaluation, the best way to go about it, or what to do with the data!

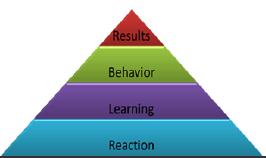

So how can we help? Techniques used to evaluate MBTI impact range from qualitative review using interviews to quantitative data collection using questionnaires. There are also training evaluation models, which help in defining the evaluation process. One we have particular experience of is Donald Kirkpatrick’s model of learning and training evaluation. OPP recently helped a client from the healthcare industry embed the MBTI framework into their organisation, then used this model to assess whether they had achieved key organisational objectives around communicating, influencing and increasing innovation. We shared our learning from this at our workshop.

Kirkpatrick’s model encourages assessors to look beyond the immediate reaction to training, and extend evaluation into the learning, behavioural change and results/performance stages. It also helps you identify which types of data to collect in relation to your goals.

With our client, the different stages were measured by:

- Reaction: participants completed attitude surveys immediately after their training.

- Behaviour: participants completed a questionnaire focused on several relevant competency ratings. Participants’ managers also rated them on the same competencies. This was done several weeks before the workshop, then again several weeks after the workshop.

- Results: participants completed an impact audit using a questionnaire; this was done several weeks after the workshop.

The study we conducted found that 82% of the participants in MBTI development believed its impact would be large; that all competencies the workshop focused on showed a positive change (influence, communications, client focus, teamwork); and that there was a perceived organisational impact on a range of factors including productivity and engagement.
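As a minimal sketch of how a pre- and post-workshop comparison like the Behaviour stage above might be tabulated, the Python snippet below averages each participant's change per competency. The ratings are invented purely for illustration and are not data from our client study; the 1-5 scale, the `ratings` structure and the `mean_change` helper are likewise assumptions rather than the method we actually used.

```python
from statistics import mean

# Hypothetical 1-5 ratings, invented for illustration only (not study data).
# Each competency maps to (pre-workshop ratings, post-workshop ratings),
# with the same participants in the same order in both lists.
ratings = {
    "influence": ([3, 2, 4, 3], [4, 3, 4, 4]),
    "communications": ([3, 3, 2, 4], [4, 3, 3, 4]),
    "client focus": ([4, 3, 3, 3], [4, 4, 3, 4]),
    "teamwork": ([2, 3, 3, 4], [3, 4, 3, 4]),
}


def mean_change(pre, post):
    """Average of each participant's post-minus-pre difference."""
    if len(pre) != len(post):
        raise ValueError("pre and post must cover the same participants")
    return mean(after - before for before, after in zip(pre, post))


# Mean change per competency; a positive value means ratings rose on average.
changes = {name: mean_change(pre, post) for name, (pre, post) in ratings.items()}

for name, change in changes.items():
    print(f"{name}: {change:+.2f}")
```

Pairing each participant's before and after ratings, rather than comparing two unrelated group averages, keeps the focus on what changed for the same individuals. The same calculation could be run separately on self-ratings and manager ratings.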

Our key learning from this experience is to keep it simple. ROI doesn’t need to be complicated to be meaningful. Our top tips are:

- Be really clear about the outputs/objectives, and liaise with key stakeholders in order to define these so that what you measure is clear and relevant

- Measure whether the stated objectives have been achieved using a pre- and post-workshop evaluation, so as to benchmark current performance against future improvements

- Take time to engage the intended recipients of any evaluation material as well as the stated ‘client’/key stakeholder – this will get you better quality data

- Be explicit about the benefits of evaluating the work, so that those involved are on board and prepared to invest the extra time that evaluation can take

During our workshop we explored the challenges our participants faced in evaluating the impact of type events, and how to increase engagement in measuring ROI. We heard that practitioners wanted to:

- Build their confidence in designing evaluation work, and test out new approaches to collecting data

- Gain clarity on the benefits of evaluating work, from both a learning and a strategic perspective

- Provide a clear rationale for what is being measured, and communicate this to all

- Receive fair payment for measuring ROI, and not just do it as a free extra!

So, where does this leave us now? Following these great discussions, we felt excited about helping our practitioners better understand the impact of type-based events, and knowing what is actually changing for individuals. In this vein, we would be open to exploring further opportunities to collaborate with MBTI practitioners in evaluating ROI. So, if this is an area of concern or special interest, please do get in touch with Richard at Richard.stockill@opp.com. Or, if you have a success story to share with other practitioners, let us know!